Putting the p-value in Context

Neuroscience researchers typically report p-values to express the strength of statistical evidence; but p-values are not sufficient on their own to understand the meaning and value of a scientific inference. In this unit, learners will learn how to interpret the p-value, how to express the size of an effect and uncertainty about a result, and how to interpret results at both the individual and population levels.

1 - You want to do some science; your PI just wants the p’s!

Introduction

You work in a sleep lab studying the effect of a new treatment regimen on memory consolidation during sleep.

Your lab collects an EEG biomarker of memory (sleep spindles) from N=20 human subjects.

To do so, your lab measures the power in the spindle band (9-15 Hz) twice per minute. Your lab has a reliable method to detect spindle activity; this detector is known to have small measurement errors outside of treatment. You expect it to still work during treatment, but also expect more variability in the spindle power estimates (hence more variability in the detections) during treatment.

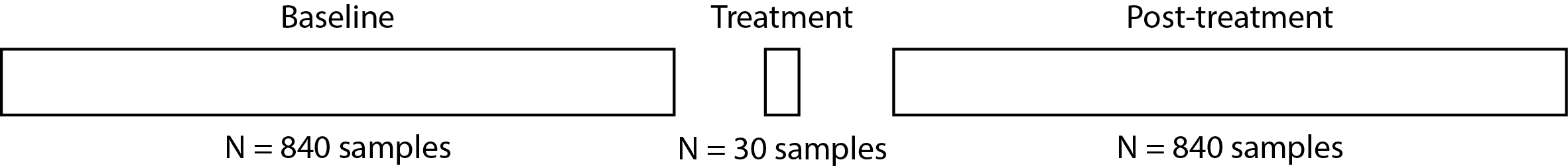

For each subject, your lab measures spindle activity during three conditions:

Baseline: Data collection lasts 7 hours while the subject sleeps the night before the intervention. This results in 840 samples of spindle activity for each subject.

During Treatment: Data collection during a 15 minute intervention during sleep, resulting in 30 samples of spindle activity for each subject.

Post-treatment: Data collection after intervention lasts 7 hours, while the subject sleeps, resulting in 840 samples of spindle activity for each subject.

Here’s a graphical representation of the data collected from one subject:

Your PI says: “I hypothesize that some subjects will show an increase in spindle activity as a result of this treatment. Other subjects may not respond to the treatment. Conduct a hypothesis test for each subject to determine if they are responsive and report the p-values associated with each test.”

Fundamentally, p-values indicate how incompatible a data set is with a specified statistical model, but they do not express a probability that any scientific hypothesis is correct or whether on hypothesis is more likely true than another. P-values can be part of a strong statistical argument, but do not provide a robust measure of evidence about a hypothesis on their own. In particular, a p-value needs to be paired with a measure of effect size to describe whether an effect is scientifically meaningful.

Another important issue is that p-values are often only meaningful in cases where the scientific question to answer has only a binary outcome. Most scientific questions require more than yes or no answers, but it is common to see researchers try to shoehorn their experiments to produce binary outcomes just so that they can express their results using p-values. This risks throwing away useful information and decreasing the statistical power of an argument.

Based on your understanding of how to interpret p-values, are there any concerns about your PIs analysis plan to just report p-values for separate tests conducted on each subject? What other approaches could you use to evaluate the effect of treatment in the subject population?

At this point, perhaps you feel that we should just get rid of p-values entirely. Good idea! Many researchers and statisticians agree with you. But despite multiple organized efforts to downplay the use of p-values in scientific research, a focus on computing and reporting p-values has persisted.

You have a detailed discussion with your PI about the issues with focusing on p-values for this study, but your PI says: “We’re not going to be able to publish anything unless we show statistical significance so just give me the p’s!”

2- Let’s do it: Define & compute p-values.

Before we compute p-values, let’s consider what a p-value means.

What does a p-value mean?

A p-value is used to compare two competing hypotheses. If our scientific hypothesis is that spindle activity changes during treatment relative to its baseline level of activity, we need another hypothesis to compare this to. In this case, we can hypothesize that the spindle activity does not change during treatment. This is called the null hypothesis.

Our goal is to collect data that provides evidence in favor of our scientific hypothesis over the null hypothesis. But this is not a fair fight; we start by assuming that the null hypothesis is true and only reject it after we achieve a sufficiently high bar of evidence. The p-value tells us how high a bar we have achieved.

One useful analogy is proof by contradiction. There, we assume that a hypothesis is true and show that this assumption leads to a contraction. If we were to observe data that could not possibly occur if the null hypothesis were true, this would be definitive evidence against that hypothesis. However, it is not the case that if we observe data that is unlikely if the null hypothesis were true then the null hypothesis is itself unlikely.

For example, most people would agree with the following statement, “If a person is American, they probably are not the US President.” Now imagine that we select an individual at random and they happen to be the US President. It is clearly not the case that this individual is probably not American. While the observation that this individual is the US president is unlikely under the null hypothesis that this individual is American, it is much more unlikely (or impossible) under the alternate hypothesis that they are not American.

Another useful analogy is to a prosecutor at a trial. In this analogy, the null hypothesis is akin to the hypothesis that the defendant is innocent. The court assumes that defendant is innocent until proven guilty. The prosecutor tries to amass and present evidence to demonstrate that the defendant is guilty beyond a reasonable doubt. A strong argument needs to include evidence that would be unlikely to occur if the hypothesis that the defendant is innocent is true, and more likely to occur if the hypothesis that the defendant is guilty is true. If the prosecutor fails to provide sufficient evidence that the defendant is guilty, it doesn’t necessarily mean that they are innocent.

In a statistical test, the p-value indicates how surprising our evidence would be if the null hypothesis were true. For our problem, if we’re sufficiently surprised by the observed data, then we’ll reject the null hypothesis, and conclude that we have evidence that the spindle activity changes relative to baseline.

Alternatively, if we’re not surprised by the observed data, then we’ll conclude that we lack sufficient evidence to reject the null hypothesis. There’s an important subtlety here that statisicians like to point out - when we’re testing this way, we never accept the null hypothesis. Instead, the best we can do is talk like a statistician and say things like “We fail to reject the null hypothesis”. In our court analogy, this is equivalent to finding the defendant not guilty rather than innocent, because we realize that it is possible that defendant committed the crime but we lacked the evidence to convince a jury beyond a reasonable doubt.

Multiple factors impact the evidence we have to reject a null hypothesis. In this Unit, we’ll explore these factors and how they influence the p-values we compute.

What does p<0.05 mean?

The probability of observing the data, or something more extreme, under the null hypothesis is less than 5%. This is typically considered sufficient evidence to reject the null hypothesis in favor of the alternative hypothesis (which posits that there is an effect or a difference). In other words, a p-value less than 0.05 suggests that the observed data is unlikely to have occurred by random chance alone, assuming the null hypothesis is true, leading researchers to reject the null hypothesis.

In our case, the null hypothesis we will first investigate is:

Null hypothesis: No difference in average spindle activity between treatment and baseline conditions.

(Select all that apply)

Now, let’s load the spindle data and compute p-values to test our null hypothesis

Let’s start by investigating the structure of the data.

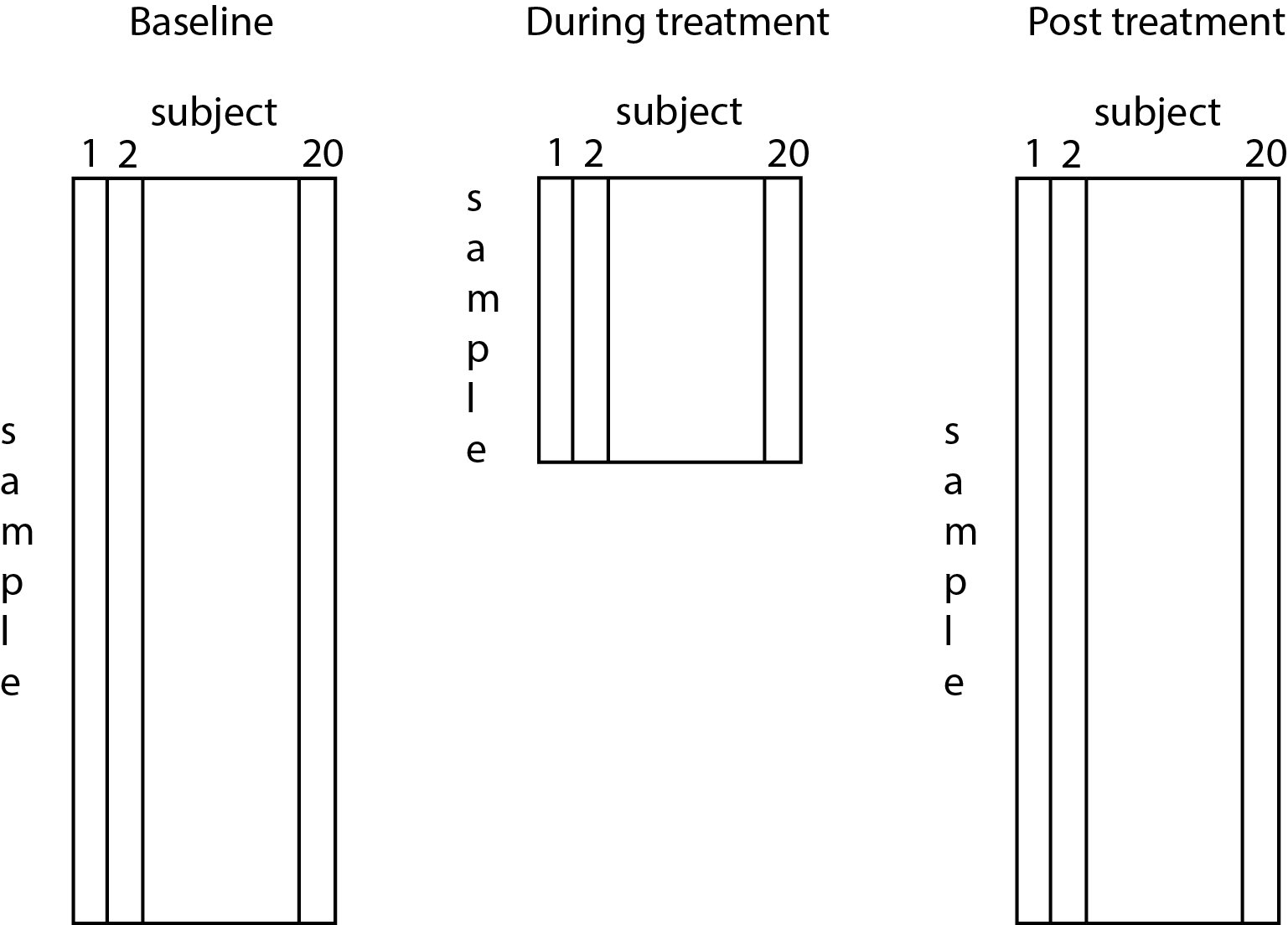

All three variables consist of observations from 20 subjects (the number of columns).

During baseline: We collect 840 samples per subject.

During treatment: We collect 30 samples per subject.

After treatment: We collect 840 samples per subject.

The number of samples is the number of rows for each variable.

You might think of these variables as rectangles (or matrices) - each row indicates a sample of spindle activity, and each column indicates a subject:

To get a sense for the the data, let’s plot the spindle activity during baseline, treatment, and post-treatment conditions for one subject:

Here, the spindle activity has been z-scored during each recording interval relative to baseline.

So, the values we observe indicate changes relative to the mean baseline spindle activity.

Positive (negative) values indicate increases (decreases) in spindle activity relative to the baseline activity.

Null hypothesis: No difference in average spindle activity between treatment and baseline conditions.

Is there a significant effect treatment? Let’s now compute some p-values.

To do so, we again assume the null hypothesis:

Null hypothesis: No difference in average spindle activity between treatment and baseline conditions.

To test this hypothesis, we’ll compute a two-sample t-test.

The two-sample t-test is by far the most popular choice when comparing two distribution.

We use this method method used to determine if there’s a significant difference between the means of two independent groups. It’s commonly applied to compare the average values of (continuous) variables across two different populations or conditions.

In our case, we’d like to compare the average spindle activity between treatment and baseline conditions. So, the two-sample t-test is (at first glance) a completely reasonable approach.

The list above consists of 20 p-values, one for each subject.

Each p-value indicates the probability of observing the data, or something more extreme, under the null hypothesis:

Null hypothesis: No difference in average spindle activity between treatment and baseline conditions.

Let’s print the p-values for each subject:

Let’s also plot the p-values:

Do we have evidence to reject the null hypothesis?

Maybe … if we had performed one statistical test, then we typically reject the null hypothesis if

p < 0.05

But here we compute 20 test (one for each subject).

When we perform multiple tests, it’s important we consider the impact of multiple comparisons. We cover this topic in detail in the Multiplicity Unit.

Here we’ll chose a specific approach to deal with multiplicity - we’ll apply a Bonferroni correction. The Bonferroni correction reduces the Type I error rate by dividing the desired overall significance level (here 0.05) by the number of tests performed (here 20 tests, one test per subject). Stated simply, the Bonferroni test adjusts the significance level by dividing it by the number of tests we perform. Doing so reduces the risk of false positives (Type I errors); or more information, see Multiplicity Unit.

So, for our analysis of the p-values from 20 subjects, let’s compare the p-values to a stricter threshold of

p < 0.05 / 20 or p < 0.0025

Thresholding in this way provides a binary, yes/no answer to the question: do we have evidence that the spindle activity during treatment differs from 0?

Let’s plot the p-values versus this new threhsold.

Summary:

We’ve computed a p-value for each subject. After correcting for multiple comparisons, our intial results suggest no evidence that we can reject the null hypothesis (of no difference in spindle activity between baseline and treatment).

Mini Summary & Review

We sought to answer the scientific question:

- Does the spindle activity during

treatmentdiffer from thebaselinespindle activity?

To answer this question, we assumed a null hypothesis:

Null hypothesis: No difference in average spindle activity between treatment and baseline conditions.

We tested this null hypothesis for each subject, computing a p-value for each subject.

Because we computed 20 p-values (one for each subject), we corrected for multiple comparsions using a Bonferroni correction (see Multiplicity Unit).

We found no p-values small enough to reject the null hypothesis.

In other words, using our initial approach, we found no evidence that the spindle activity during treatment differs from baseline.

(Select all that apply)

3- Maybe there’s something else we can publish?

Our initial results are discouraging; we find no evidence of a change in spindle activity from baseline during treatment.

That’s dissappointing. Rather than abandon our data (which took years to collect), our PI asks us to continue exploring the data.

Data exploration is common in neuroscience. In general, as practicing neuroscientists, we explore our data for interesting features.

However, when undertaking data exploration, we must make it clear (e.g., by reporting what we explored, whether the results are significant or not).

Our PI recommends that we examine the change in spindle activity post-treatment.

Perhaps the treatment produces a longer-term affect that manifests during the post-treatment period.

Exploratory vs Confirmatory Analyses & Guarding Against p-Hacking

Our initial results are discouraging: we find no evidence of a change in spindle activity from baseline during treatment.

Instead of discarding years of data, our PI encourages us to explore the data for unexpected patterns — this is perfectly legitimate as long as we remain transparent.

Data exploration helps generate new hypotheses. We might notice trends, outliers, or condition-specific features that suggest where real effects could lie, to help guide future experiments.

P-hacking occurs when we repeatedly mine the data—trying different subsets, covariates, or outcomes—until something “significant” emerges. This inflates false positives and misleads follow-up studies.

To stay honest, every exploratory analysis must be clearly labeled as such. We should report exactly what we tested (e.g. “we examined spindle rate in the baseline, treatment, and post-treatment intervals), and include both significant and nonsignificant findings.

Confirmatory analysis comes later: once exploration suggests a specific hypothesis (for example, an increase in post-treatment spindle rates), we pre-register that test or validate it in a fresh dataset. Only then do p-values carry their usual weight.

Our next step—examining post-treatment spindle activity—serves as a bridge. We explore here, but plan to follow up with a dedicated, confirmatory protocol before drawing firm conclusions.

For our new analysis, our null hypothesis now focuses on the post-treatment data:

Null hypothesis: Mean spindle activity post-treatment differs from the mean spindle activity during baseline.

(Select all that apply)

During the post-treatment condition:

we have many more samples to analyze (N=840) compared to the

treatmentcondition (N=30).the spindle estimates are less noisy compared to the

treatmentcondition

(Select all that apply)

Let’s repeat our previous analysis, but now examine the post-treatment spindle activity.

Let’s print and plot the p-values for each subject:

Because we’ve computed 20 p-values (one from each subject), let’s again correct for multiple compariosns using a Bonferroni correction (see Multiplicity Unit).

Look at how small the p-values are post-treatment!

- All 20 p-values post-treatment are less than 0.05/20, the Bonferroni corrected p-value threshold.

Remembe that, during treatment, the p-values are much larger, and we find no p-values less than 0.05/20.

We find many more significant p-values post-treatment (20 out of 20, after Bonferroni correction).

Our results seem to reveal a new conclusion:

In Mini 2, we found no evidence of a change in spindle activity

during treatment.In this Mini, we find many very small p-values (less than 0.05/20)

post-treatment.

More specifically, we find evidence of a significant change in spindle activity post-treatment in 9/20 subjects.

The PI is very excited with our new results, which appear to upend the literature.

The PI drafts the title for a high-impact paper:

Draft paper title: Post-Treatment Paradox: Clear Human Responses, Despite Absence of Treatment Effect

But are we sure?

(Select all that apply)

This is a very important question … and we haven’t fully answered it yet.

We collect many more samples post-treatment, and our measurements are more accurate post-treatment compared to during treatment.

Both of these features impact the evidence we collect to reject the null hypothesis.

So, are you sure about the post-treatment results?

Alert:

- Wait, I’m not so sure …

- Why did you ask me to review the characteristics of the data, and think about how this might impact the data?

Mini Summary & Review

We sought to answer the scientific question:

- Does the spindle activity

post-treatmentdiffer from thebaselinespindle activity?

To answer this question, we assumed a null hypothesis:

Null hypothesis: No difference in average spindle activity between post-treatment and baseline conditions.

We tested this null hypothsis for each subject, computing a p-value for each subject.

Because we computed 20 p-values (one for each subject), we corrected for multiple comparsions using a Bonferroni correction (see Multiplicity Unit).

We found all of the p-values were small enough to reject the null hypothesis.

In other words, in this exploratory analysis, we found evidence that the spindle activity during post-treatment differs from baseline.

This differs from our results during treatment, in which we found no evidence that the spindle activity during treatment differs from baseline.

4- Not so fast: visualize the measured data, always.

In our previous analysis, we may have found an interesting result: spindle activity post-treatment, but not during treatment, differs from baseline.

Scientifically, we might conlcude that our treatment has a long-lasting effect, impacting spindle activity post-treatment.

To infer these results, we computed and compared p-values, testing specific null hypotheses for each subject.

We’ve hinted above that something isn’t right … let’s now dive in and identify what we could have done differently.

Our initial approach has focused exclusively on p-values.

P-values indicate how much evidence we have to reject a null hypothesis given the data we observe.

Let’s again plot the p-values during treatment and post-treatment for each subject:

We’ve focused on p-values to draw our scientific conclusions.

However, we’ve almost completely ignored the spindle measurements themselves!

Let’s return to the spindle activity measurements themselves, and see how these measurements relate to the p-values.

Now, let’s return to the spindle activity and look at those values directly.

Let’s begin with an example from Subject 6.

For Subject 6, we found:

treatmentp=0.033post-treatmentp=0.0021

From these p-values, we might expect:

Spindle activity during

treatmentnear 0 (i.e., similar tobaseline).Spindle activity

post-treatmentfar from 0 (i.e., different frombaseline).

But, we find the opposite.

Spindle activity during

treatmentfar from 0 (i.e., different frombaseline).Spindle activity

post-treatmentnear 0 (i.e., similar tobaseline).

Let’s make similar plots for all 20 subjects.

It’s nice to visualize all of the data, but doing so can also be overwhelming.

Let’s summarize the spindle activity in for each subject by ploting the mean and the standard error of the mean.

Let’s summarize what we’ve found so far:

| State | p-values | spindle activity |

|---|---|---|

| During treatment | p>0.05/20 (not significant) | mean spindle activity > 0 |

| Post-treatment | p<<0.05/20 (signficiant) | mean spindle activity \(\approx\) 0. |

Something’s not adding up here …

During

treatment, we find no evidence of a signficant change in spindle activity from baseline (i.e., the p-values are big). However, looking at the mean spindle activity, we find spindle activities that often exceed 0.Post-treatment, we find evidence of a signficant change in spindle activity from baseline (i.e., the p-values are small) in each subject. However, looking at the mean spindle activity, we find those values tend to appear near 0.

So, why do the spindle activities during treatment often exceed 0 (i.e., exceed baseline) spindle activity, but p>0.05?

And, why are the post-treatment spindle activities so near 0 (i.e., so near the baseline) spindle activity, but p<<0.05?

I’m confused!

Alert:

These confusing conclusions occur because we’ve made two common errors:

We compared p-values between the

treatmentandpost-treatmentgroups.We focused exclusively on p-values without thinking more carefully about the data used to compute those p-values.

To resolve these confusing conclusions, let’s think more carefully about what the p-value represents.

The p-value measures the strength of evidence against the null hypothesis.

Three factors can impact the strength of evidence:

Sample Size (i.e., the number of observations).

Effect Size (i.e., bigger differences in spindle activity between conditions are easier to detect.)

Variability (or Noise) in Measurements: (i.e., how reliably we measure spindle activity).

treatment versus post‐treatment? How might this impact the results?

(Select all that apply)

treatment versus post-treatment? How might this impact the results?

(Select all that apply)

treatment versus post-treatment? How might this impact the results?

(Select all that apply)

Conclusion / Summary / Morale:

We began with the scientific statement:

“I expect during treatment that spindle activity exceeds the baseline spindle activity.”

Our initial approach focused on computing and comparing p-values.

That’s a bad idea.

We’re not interested in comparing the evidence we have for each null-hypothesis (the p-value); the evidence depends on the sample size, effect size, and measurement variability.

Instead, we’re more interested in comparing the spindle activity between condidtions.

In other words, we’re intested in the effect size, not the p-value.

This observation suggests a different analysis path for an improved approach.

We can answer the same scienfitic question by comparing the spindle activities between conditions, not the p-values.

We’ve started to see this in the plots of spindle activity at baseline, during treatment, and post-treatment.

For more analysis (e.g., different statistical test and effect size) continue on to the next sections.

5- So, what went wrong?

In our initial analysis, we’ve made a couple of common mistakes.

Mistake #1: Confusing p-values with effect size.

- A p-value indicates the amount of statistical evidence, not the effect size. In our analysis, we found small p-values when comparing the spindle rates at baseline versus post-treatment. However, the effect size was very small; the large number of observations increased our statistical evidence, allowing us to detect a small effect size.

Remedy #1: Estimate what you care about.

- We’re interested in the effect during treatment; so, examine the spindle rate during treatment. Plotting the mean spindle rate, and standard error of the mean, during treatment allows us to directly estimate the effect of interest.

Mistatke #2: Comparing p-values instead of data.

- We found much smaller p-values post-treatment compared to during treatment (both computed versus the same baseline). Therefore, the effect is “stronger” post-treatment, right? WRONG! The p-values indicate that we have more statistical evidence of a difference post-treatment, but not that the effect size is strong.

Remedy #2: Compare the effect sizes.

- Comparing the effect sizes, we find larger means spindle rates during treatment compared to baseline (and post-treatment)

Mistake #3: Separate statistical tests for each subject.

- Our scientific question was, initially, focused on the impact of treatment on spindle rate. We’re not necessarily interested in this for each individual subject [URI HELP!]

Remedy #3: Use an omnibus test.

- See the next section to learn more.

6- One test to rule them all: an omnibus test.

(PENDING)

Do there exist subjects for which there is a significant effect? NO

- Concatenate data from all subjects (works if you beleive everyone has an effect).

- show CI, provide associated p-values 1a. Treatment (significant & meaningful @ population level) 1b. Post ( significant & not meaningful @ population level)

- If not everyone has an effect, dilute effect size / less power.

- Alternartive, if you believe not everyone has an effect, mixed-effect model.

7 - Optional Section: LME

(PENDING)

Estimate effect size and responders

In Intro: initial H is some people respond and some don’t

8- Summary

(PENDING)